Exclude content from search engines

There can be various reasons for wanting to exclude content from search engines, one reason might be that it is an Optimizely integration or preproduction environment, or some other testing environment.

Robots.txt

There has long been a misconception that the best way to prevent Google and other search engines from indexing a website is to use a robots.txt file. If you want to prevent Google from crawling the site, you can place such a robots.txt file at the root level.

User-agent: Googlebot

Disallow: /And if you want to stop all crawlers that care to follow the directive, the file can look like this.

User-agent: *

Disallow: /The problem with robots.txt is that it only tells search engines not to crawl the website for content to index. They will still be able to index pages if the search engine comes across them in other ways, e.g., via incoming links from other websites.

Instead, use one of the methods below.

Robots meta tag

Robots meta tags can be included as part of the markup on the individual page and placed within the <head> element. There are various parameters you can use, but the most relevant are noindex and nofollow. These parameters respectively request that the page should not be indexed, and that crawlers should not follow links further from the page.

Example of a meta tag that prevents indexing and further following of links.

<meta name="robots" content="noindex, nofollow">X-robots-tag

The last alternative, unlike the previous one, is not a tag – even though the name suggests it. X-robots-tag is an HTTP header.

The fact that this information is sent as a header, and not as part of the page as markup, means that this method is also suitable for other content than HTML. This works just as well for images, PDF documents, and video files.

X-robots tag can be used with the same two parameters as the robots meta tag.

x-robots-tag: noindex, nofollowThe header can be added in startup.cs/program.cs like this in new .NET.

app.Use(async (context, next) =>

{

context.Response.Headers.TryAdd("x-robots-tag", "noindex, nofollow");

});Or in web.config like this in old .NET Framework.

<system.webServer>

<httpProtocol>

<customHeaders>

<add name="x-robots-tag" value="noindex, nofollow" />

</customHeaders>

</httpProtocol>

</system.webServer>If the damage is already done

If Google has indexed your content and you want to remove it, the absolute worst thing you can do is to add a robots.txt file that blocks Google out. Then Google will not be able to update the index, regardless of whether you make any of the other suggestions above.

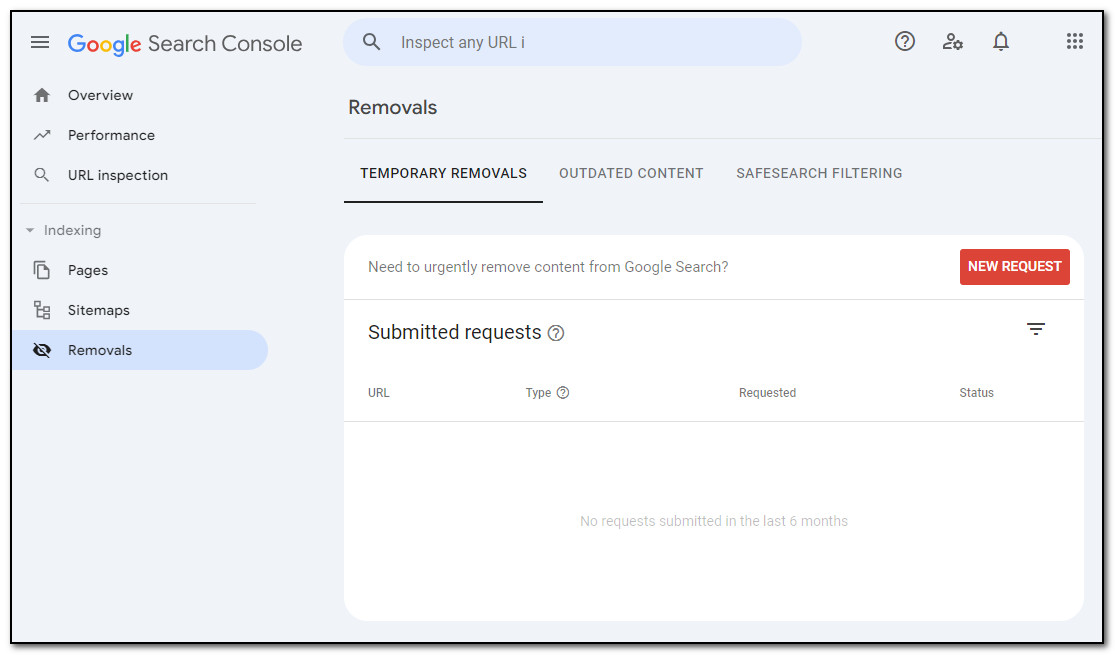

If you do not just want to wait until the next time Google crawls the website, but want to speed things up, you can use the removal tool in Google Search Console.

Conclusion

The safest ways to keep content away from search engines are:

- Do not publish content on the internet

- Put the content behind a login, IP block, or similar

If you still want to have content on the internet, but without search engines snooping around, use robots meta tag, or x-robots-tag.