Episerver image anonymization using Microsoft Cognitive Services and Face API

With the introduction of the General Data Protection Regulation (GDPR), storing personal information is not something to be taken lightly. Using Microsoft Cognitive Services, you can identify what is in the images that editors or users upload to your site, and then take action based on the identified content and your policy. With this approach, you can block uploads of adult content, flag images containing faces, or even modify images during upload if they meet specific criteria.

I wanted to see if it was possible to automatically censor the eye region of faces in images uploaded through the Episerver editorial interface. To accomplish this, I use the Face API to detect faces and face landmarks.

The result: a censored picture of myself.

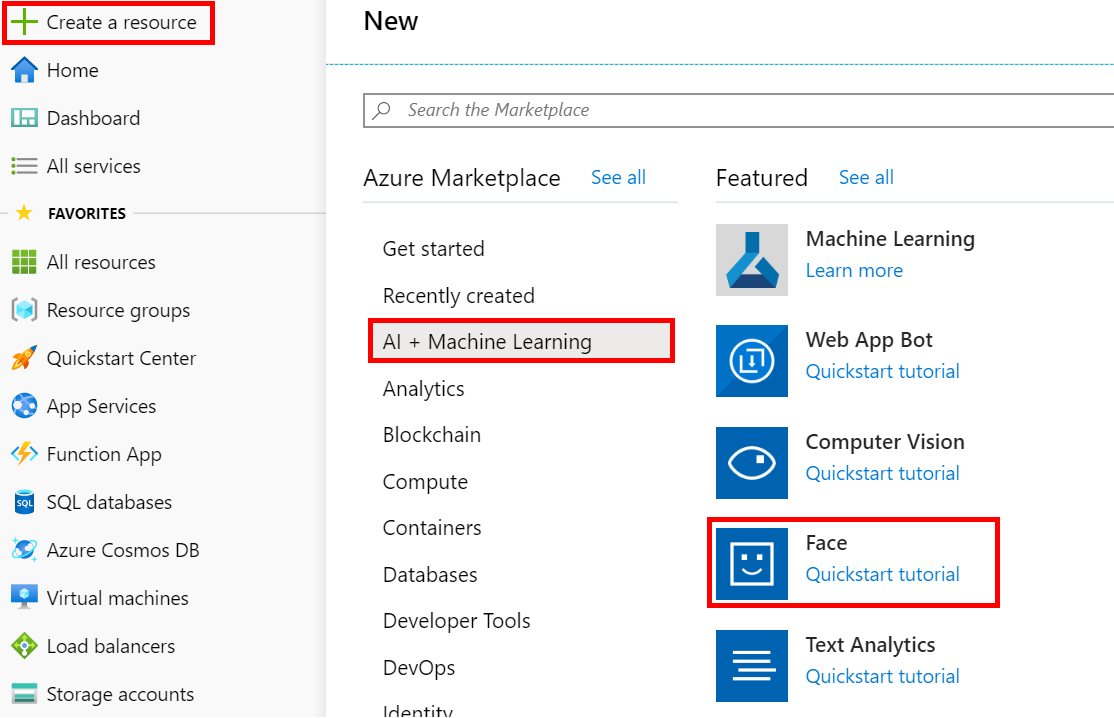

I'll start by creating an Azure Cognitive Services resource in the Azure portal. It only takes a few clicks.

Add the API key and endpoint URL to the appSettings section of web.config:

```xml
<appSettings>
  <add key="Gulla.EpiserverCensorFaces:CognitiveServices.SubscriptionKey" value="xxxxxxxxxx" />
  <add key="Gulla.EpiserverCensorFaces:CognitiveServices.Endpoint" value="https://xxxxxxxxxx.cognitiveservices.azure.com/" />
</appSettings>
```

Next, I install the Face API client library from NuGet, using the Package Manager Console:

```powershell
Install-Package Microsoft.Azure.CognitiveServices.Vision.Face -Version 2.5.0-preview.1
```

Then I need to hook into the Episerver event that is raised when content is created. I'll do this using an initialization module.

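```csharp
using EPiServer;
using EPiServer.Core;
using EPiServer.Framework;
using EPiServer.Framework.Initialization;

// [InitializableModule] is what makes Episerver discover and run the module.
[InitializableModule]
[ModuleDependency(typeof(EPiServer.Web.InitializationModule))]
public class ImageEventInitialization : IInitializableModule
{
    public void Initialize(InitializationEngine context)
    {
        var events = context.Locate.ContentEvents();
        events.CreatingContent += CreatingContent;
    }

    // Required by IInitializableModule: detach the handler again.
    public void Uninitialize(InitializationEngine context)
    {
        var events = context.Locate.ContentEvents();
        events.CreatingContent -= CreatingContent;
    }

    private static void CreatingContent(object sender, ContentEventArgs e)
    {
        // Only images that actually have binary data are processed.
        if (e.Content is ImageData imageData && imageData.BinaryData != null)
        {
            CensorFaceHelper.CensorBinaryData(imageData.BinaryData);
        }
    }
}
```

Next, I implement the method CensorBinaryData. It does three things: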

- Detect faces

- Censor faces

- Update image blob
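Stripped of Episerver and System.Drawing, the three steps form a small pipeline. The sketch below is a hypothetical, dependency-free outline (CensorPipeline and its delegates are illustrative names, not part of the package): the delegates stand in for the Face API call, the drawing, and the blob write. Written this way, one property is easy to test: an image without faces is never rewritten.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical outline of the detect / censor / update steps.
// The delegates stand in for the Face API call, the drawing and the blob write.
public static class CensorPipeline
{
    // Returns true if the image was rewritten.
    public static bool Run<TFace>(
        byte[] imageBytes,
        Func<byte[], IList<TFace>> detectFaces,
        Func<byte[], IList<TFace>, byte[]> censorFaces,
        Action<byte[]> writeImage)
    {
        // 1. Detect faces.
        IList<TFace> faces = detectFaces(imageBytes);

        // No faces: leave the stored image untouched.
        if (faces == null || !faces.Any()) return false;

        // 2. Censor faces.
        byte[] censored = censorFaces(imageBytes, faces);

        // 3. Update the image blob.
        writeImage(censored);
        return true;
    }
}
```

Returning early before any image decoding also avoids re-encoding (and possibly recompressing) images that contain no faces.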

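```csharp
// Members of the static CensorFaceHelper class.
private static IFaceClient _client;

// Read the appSettings keys added to web.config (System.Configuration).
private static readonly string SubscriptionKey =
    ConfigurationManager.AppSettings["Gulla.EpiserverCensorFaces:CognitiveServices.SubscriptionKey"];
private static readonly string Endpoint =
    ConfigurationManager.AppSettings["Gulla.EpiserverCensorFaces:CognitiveServices.Endpoint"];

private const string RecognitionModel = "recognition_02";
private const float CensorWidthModifier = 0.25f;
private const float CensorHeightModifier = 4;

// Created on first use and reused for later requests.
private static IFaceClient Client =>
    _client ?? (_client = new FaceClient(new ApiKeyServiceClientCredentials(SubscriptionKey))
    {
        Endpoint = Endpoint
    });

public static void CensorBinaryData(Blob imageBlob)
{
    IList<DetectedFace> detectedFaces;
    using (var detectionStream = imageBlob.OpenRead())
    {
        detectedFaces = GetFaces(detectionStream);
    }

    if (!detectedFaces.Any()) return;

    // Image.FromStream needs its stream to stay open for the lifetime of the image.
    using (var imageStream = new MemoryStream(imageBlob.ReadAllBytes()))
    using (var image = Image.FromStream(imageStream))
    {
        using (var graphics = Graphics.FromImage(image))
        {
            foreach (var face in detectedFaces)
            {
                CensorFace(face, graphics);
            }
        }

        UpdateImageBlob(imageBlob, image);
    }
}
```

The Face API client library handles the detection of faces. I simply specify the newest recognition model available at the time of writing (recognition_02), ask for face landmarks, lean back, and wait for the result.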
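Because the CreatingContent handler is synchronous, waiting for the result means blocking on an asynchronous call. Running the call through Task.Run keeps its continuations off the ASP.NET request context, a common way to avoid deadlocks when blocking. One detail worth knowing: Task.Result wraps a failure in an AggregateException, while GetAwaiter().GetResult() rethrows the original exception. A minimal, self-contained sketch of that difference, where FailingDetectAsync is a hypothetical stand-in for a failing Face API call:

```csharp
using System;
using System.Threading.Tasks;

public static class BlockingDemo
{
    // Hypothetical stand-in for a Face API call that fails.
    public static async Task<int> FailingDetectAsync()
    {
        await Task.Yield();
        throw new InvalidOperationException("Detection failed");
    }

    // Blocks with .Result and reports the exception type the caller sees.
    public static Type ExceptionTypeFromResult()
    {
        try
        {
            _ = Task.Run(() => FailingDetectAsync()).Result;
            return null;
        }
        catch (Exception ex)
        {
            return ex.GetType();
        }
    }

    // Blocks with GetAwaiter().GetResult(), which rethrows the original exception.
    public static Type ExceptionTypeFromGetResult()
    {
        try
        {
            _ = Task.Run(() => FailingDetectAsync()).GetAwaiter().GetResult();
            return null;
        }
        catch (Exception ex)
        {
            return ex.GetType();
        }
    }
}
```

ExceptionTypeFromResult reports AggregateException, while ExceptionTypeFromGetResult reports InvalidOperationException, which can make Face API errors easier to recognize in logs.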

```csharp
// The content event is synchronous, so block on the asynchronous Face API call.
// Task.Run executes it on the thread pool, away from the ASP.NET request context.
private static IList<DetectedFace> GetFaces(Stream image)
{
    var task = Task.Run(async () => await DetectFaces(image));
    return task.Result;
}

private static async Task<IList<DetectedFace>> DetectFaces(Stream image)
{
    return await Client.Face.DetectWithStreamAsync(image,
        recognitionModel: RecognitionModel,
        returnFaceLandmarks: true);
}
```

Censoring the eyes is done by drawing a thick line across them.
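A line drawn with a wide pen paints a rectangle rotated along the line, and with System.Drawing's default flat line caps it ends exactly at the two end points. If you would rather fill that area yourself, for example with Graphics.FillPolygon, the corners can be computed as in this minimal sketch. Vec2 and CensorBar are hypothetical names, and Vec2 stands in for PointF.

```csharp
using System;

// Hypothetical stand-in for PointF.
public readonly record struct Vec2(double X, double Y);

public static class CensorBar
{
    // Corners of the rectangle covered by a line from start to end drawn with
    // the given pen width. Assumes flat caps and start != end.
    public static Vec2[] Corners(Vec2 start, Vec2 end, double penWidth)
    {
        double dx = end.X - start.X;
        double dy = end.Y - start.Y;
        double length = Math.Sqrt(dx * dx + dy * dy);

        // Unit normal to the line, scaled to half the pen width.
        double nx = -dy / length * penWidth / 2;
        double ny = dx / length * penWidth / 2;

        return new[]
        {
            new Vec2(start.X + nx, start.Y + ny),
            new Vec2(end.X + nx, end.Y + ny),
            new Vec2(end.X - nx, end.Y - ny),
            new Vec2(start.X - nx, start.Y - ny),
        };
    }
}
```

For a horizontal line from (0, 10) to (100, 10) with pen width 6, the corners are (0, 13), (100, 13), (100, 7) and (0, 7).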

private static void CensorFace (DetectedFace face, Graphics graphics)

{

graphics.DrawLine(

GetCensorPen(face),

GetCensorLeftPoint(face),

GetCensorRightPoint(face));

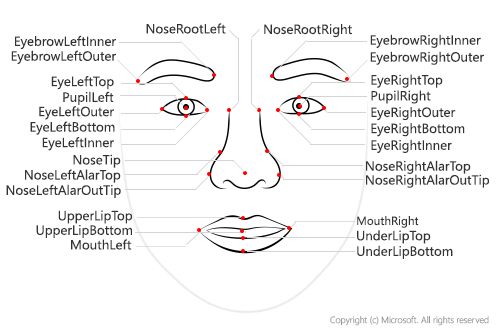

}I need to calculate the size of the line, based on the following face landmarks returned from the Face API.

The thickness of the line (the pen width) is the height of the taller eye multiplied by a constant, CensorHeightModifier. The line runs between the outer corners of the eyes and is extended beyond each corner by a constant fraction, CensorWidthModifier, of the distance between them.
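Written as arithmetic, with outer eye corners L and R and the modifier k (CensorWidthModifier), the end points are L - k(R - L) and R + k(R - L), so the bar is (1 + 2k) times the corner-to-corner distance: 1.5 times with k = 0.25. Extending along the full vector R - L, Y included, keeps the bar aligned with the eyes whichever way the head is tilted. A minimal sketch without the Face API types; CensorGeometry, BarHeight, BarEndPoints and Landmark are hypothetical names, and Landmark stands in for the SDK's landmark coordinates.

```csharp
using System;

// Hypothetical stand-in for a Face API landmark coordinate.
public readonly record struct Landmark(double X, double Y);

public static class CensorGeometry
{
    // Same values as CensorWidthModifier and CensorHeightModifier in the post.
    public const double WidthModifier = 0.25;
    public const double HeightModifier = 4;

    // Pen width: the taller of the two eyes, times HeightModifier.
    public static double BarHeight(Landmark leftTop, Landmark leftBottom, Landmark rightTop, Landmark rightBottom) =>
        HeightModifier * Math.Max(Math.Abs(leftTop.Y - leftBottom.Y), Math.Abs(rightTop.Y - rightBottom.Y));

    // End points: each outer eye corner pushed outward along the eye axis
    // by WidthModifier times the corner-to-corner vector.
    public static (Landmark Start, Landmark End) BarEndPoints(Landmark leftOuter, Landmark rightOuter)
    {
        double dx = rightOuter.X - leftOuter.X;
        double dy = rightOuter.Y - leftOuter.Y;
        return (
            new Landmark(leftOuter.X - WidthModifier * dx, leftOuter.Y - WidthModifier * dy),
            new Landmark(rightOuter.X + WidthModifier * dx, rightOuter.Y + WidthModifier * dy));
    }
}
```

For outer eye corners at (100, 100) and (200, 120), the bar runs from (75, 95) to (225, 125). If the left eye is 10 pixels tall and the right eye 6, the bar is 40 pixels thick.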

```csharp
private static Pen GetCensorPen(DetectedFace face)
{
    // The pen width is the height of the black bar.
    return new Pen(Color.Black, GetCensorHeight(face));
}

private static float GetCensorHeight(DetectedFace face)
{
    // The taller of the two eyes, scaled by CensorHeightModifier.
    return CensorHeightModifier * (float)Math.Max(
        Math.Abs(face.FaceLandmarks.EyeLeftTop.Y - face.FaceLandmarks.EyeLeftBottom.Y),
        Math.Abs(face.FaceLandmarks.EyeRightTop.Y - face.FaceLandmarks.EyeRightBottom.Y));
}

private static PointF GetCensorLeftPoint(DetectedFace face)
{
    var left = face.FaceLandmarks.EyeLeftOuter;
    var right = face.FaceLandmarks.EyeRightOuter;

    // Continue past the left eye's outer corner, along the right-to-left eye axis,
    // so the bar follows the tilt of the head in either direction.
    return new PointF(
        (float)(left.X - CensorWidthModifier * (right.X - left.X)),
        (float)(left.Y - CensorWidthModifier * (right.Y - left.Y)));
}

private static PointF GetCensorRightPoint(DetectedFace face)
{
    var left = face.FaceLandmarks.EyeLeftOuter;
    var right = face.FaceLandmarks.EyeRightOuter;

    // Continue past the right eye's outer corner, along the left-to-right eye axis.
    return new PointF(
        (float)(right.X + CensorWidthModifier * (right.X - left.X)),
        (float)(right.Y + CensorWidthModifier * (right.Y - left.Y)));
}
```

Finally, the image is updated.

```csharp
private static void UpdateImageBlob(Blob imageBlob, Image image)
{
    using (var ms = new MemoryStream())
    {
        // Re-encode in the image's original format (JPEG, PNG, ...).
        image.Save(ms, image.RawFormat);
        var imageBytes = ms.ToArray();

        // Write the censored image back to the blob.
        using (var ws = imageBlob.OpenWrite())
        {
            ws.Write(imageBytes, 0, imageBytes.Length);
        }
    }
}
```

Here is the result, using an example image from the Episerver Alloy solution. The faces recognized by the Face API are censored.

A banner image from the Episerver Alloy solution, censored

The full source code is available on GitHub and a NuGet package is available on nuget.org.

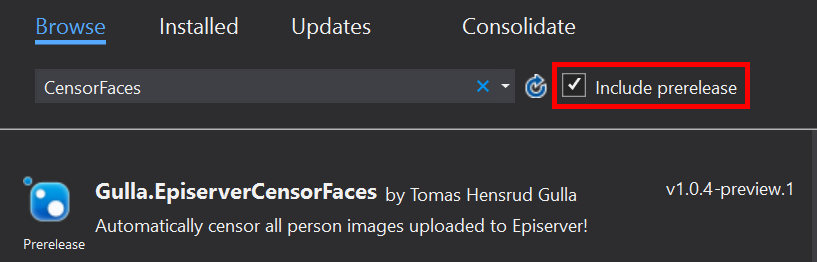

Because one of the dependencies is a prerelease of Microsoft.Azure.CognitiveServices.Vision.Face, you must tick the checkbox «Include prerelease» in the Visual Studio NuGet package manager.